Causality versus Association#

As scientists, we are often looking for patterns or relations between variables. When there exists a pattern between two variables, we call this an association[1]. For example, time of day is associated with the traffic on Chicago’s Lake Shore Drive and temperature outside is associated with the number of people at Lake Michigan.

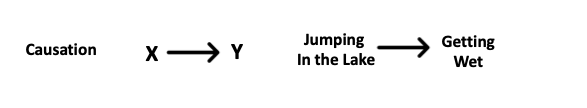

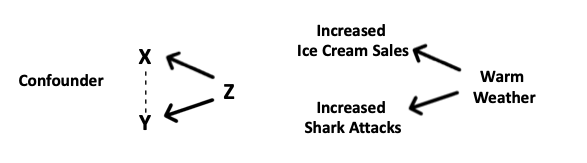

When we see that two variables X and Y are associated, we often wonder if one causes the other. Here are three common scenarios:

Causation: change in X causes change in Y (or vice-versa)

Common response: some other variable Z causes change in both X and Y

Colliding: changes in both X and Y cause change in some variable Z

Well-designed studies, which we will discuss further in the next section, can help distinguish between the three scenarios which are often depicted using causal graphs. A causal graph is a graph where each node depicts a variable and each edge is directed (an arrow) pointing in the direction of a cause. The figure below shows a causal graph as well as an example of a causal association.

When we see a causal association between X and Y we can depict it with an arrow from the cause to the effect. For example jumping in the lake is the direct cause of getting wet so the arrow is drawn from jumping in the lake to getting wet.

Common Response#

The causal graph figure shows below an association between X and Y (depicted by the dotted line) that is present due to the presence of a third variable, Z.

This other variable may be a lurking or a confounding variable depending on the situation. A lurking variable is a variable not directly measured or accounted for in a study that results in a spurious association. When planning a study, researchers try to avoid lurking variables by including as many potentially related variables as possible.

A confounding variable is a variable that is included in a study but that still results in a spurious association because it is not properly accounted for. Conditioning on a confounding variable is best practice to remove the spurious association between X and Y. Conditioning on a variable means looking at only one value of the conditioned variable. For example: suppose we have a dataset that contains information about beach events. We plot ice cream sales and number of swimmers at the beach and see that there is a positive association such that as ice cream sales increase so does the number of swimmers. Should we conclude that ice cream makes people want to go swimming? Thinking more deeply about the problem, we realize that more people go swimming when the weather is warm. Ice cream sales also increase during warm weather, therefore both variables have a common cause: weather. When we condition on weather and only consider ice cream sales and swimming in the summer months, the association disappears. However, it is important that we condition only on confounding variables. Conditioning on variables that are not confounders can introduce spurious associations as we will see later in this section.

An Illustrative Example of Confounding#

Consider the following dataset which contains information about cases of the Delta variant of COVID-19 in the UK[2].

covid = pd.read_csv("../../data/simpsons_paradox_covid.csv")

covid.head()

| age_group | vaccine_status | outcome | |

|---|---|---|---|

| 0 | under 50 | vaccinated | death |

| 1 | under 50 | vaccinated | death |

| 2 | under 50 | vaccinated | death |

| 3 | under 50 | vaccinated | death |

| 4 | under 50 | vaccinated | death |

This dataset contains three columns with information on the age of the individual, whether they received the COVID-19 vaccine, and whether they survived the disease. We are interested in the mortality rate for vaccinated and unvaccinated patients.

outcome_vax_table = covid.groupby(["vaccine_status","outcome"]).count().rename(columns={"age_group":"counts"})

# There are 151052 unvaccinated patients and 117114 vaccinated patients in the dataset

outcome_vax_table["proportion"] = outcome_vax_table['counts'] / [151052, 151052, 117114, 117114]

outcome_vax_table

| counts | proportion | ||

|---|---|---|---|

| vaccine_status | outcome | ||

| unvaccinated | death | 253 | 0.001675 |

| survived | 150799 | 0.998325 | |

| vaccinated | death | 481 | 0.004107 |

| survived | 116633 | 0.995893 |

You may be surprised to see that the proportion of patients who died was higher in the vaccinated group than in the non-vaccinated group. However, there is a confounding variable that affects both whether someone received the vaccine and risk of death from COVID-19: age. Those who are older are more at-risk for COVID-19 and also received more vaccines. We can see this from the table below:

age_vax_table = covid.groupby(["age_group","vaccine_status"]).count().rename(columns={"outcome":"counts"})

# There are 30747 patients over 50 and 237419 patients under 50 in the dataset

age_vax_table["proportion"] = age_vax_table['counts'] / [30747, 30747, 237419, 237419]

age_vax_table

| counts | proportion | ||

|---|---|---|---|

| age_group | vaccine_status | ||

| 50 + | unvaccinated | 3440 | 0.111881 |

| vaccinated | 27307 | 0.888119 | |

| under 50 | unvaccinated | 147612 | 0.621736 |

| vaccinated | 89807 | 0.378264 |

If we condition on this confounder, we can see the true relationship between vaccine_status and outcome.

covid['counter'] = 1 # Create this fourth variable since we will be grouping on all other variables

conditioned = covid.groupby(["age_group","vaccine_status","outcome"]).count()

conditioned["proportion"] = conditioned['counter'] / [3440, 3440, 27307, 27307, 147612, 147612, 89807, 89807]

conditioned

| counter | proportion | |||

|---|---|---|---|---|

| age_group | vaccine_status | outcome | ||

| 50 + | unvaccinated | death | 205 | 0.059593 |

| survived | 3235 | 0.940407 | ||

| vaccinated | death | 460 | 0.016845 | |

| survived | 26847 | 0.983155 | ||

| under 50 | unvaccinated | death | 48 | 0.000325 |

| survived | 147564 | 0.999675 | ||

| vaccinated | death | 21 | 0.000234 | |

| survived | 89786 | 0.999766 |

Looking at our conditioned data, we see that after accounting for age, those who received the vaccine had lower mortality rates than those who did not receive the vaccine. This reversal of a trend when groups are separated instead of combined is known as Simpson’s Paradox.

Colliding Variables#

The next causal graph figure depicts an association between X and Y that is due to conditioning on the collider variable, Z.

We see spurious associations between two variables X and Y when both are causes of a third variable Z and we are conditioning on Z[3]. For example: looking only at hospitalized patient data (conditioning on being hospitalized), we see a negative association between diabetes and heart disease such that those who have diabetes are less likely to have heart disease. However, it is known that diabetes is a risk factor of heart disease[4] – having diabetes makes you more likely to develop heart disease – so we should expect to see the opposite effect. This reversal in association occurs because we are only looking at hospitalized patients and both heart disease and diabetes are causes of hospitalization. Diabetes increases likelihood of heart disease and likelihood of hospitalization. Heart disease increases likelihood of hospitalization as well. If you are hospitalized for diabetes, it is less likely you also have heart disease. Therefore, those with diabetes in this sample of hospitalized patients have lower incidence of heart disease than those with diabetes in the general population, reversing the association between diabetes and heart disease.

Let’s consider another example. Suppose your friend is complaining about a recent date. The person she went to dinner with was very good-looking but had no sense of humor. Your friend comments that it seems all good-looking people have a bad sense of humor. You know that in reality looks and humor are not related. Your friend is conditioning on a collider by considering only people that she dates. She likely only dates people that meet a certain threshold of looks and humor. Those that are very good-looking may not need to have as good of a sense of humor to get a date whereas those who are less good-looking may need to have a better sense of humor. This creates a negative association between looks and humor that does not exist outside of her dating pool.

An Illustrative Example of Colliding#

Returning to our COVID-19 example, there is also a colliding variable such that both age and vaccine status affect mortality rate. Currently, this is not an issue because we are not conditioning on outcome. However, let’s return to our investigation of vaccine status. We noticed that more people aged over 50 received the vaccine than those under 50. What would happen if we conditioned on outcome?

collider = covid.groupby(["outcome","age_group","vaccine_status"]).count()

collider["proportion"] = collider['counter'] / [665, 665, 69, 69, 30082, 30082, 237350, 237350]

collider

| counter | proportion | |||

|---|---|---|---|---|

| outcome | age_group | vaccine_status | ||

| death | 50 + | unvaccinated | 205 | 0.308271 |

| vaccinated | 460 | 0.691729 | ||

| under 50 | unvaccinated | 48 | 0.695652 | |

| vaccinated | 21 | 0.304348 | ||

| survived | 50 + | unvaccinated | 3235 | 0.107539 |

| vaccinated | 26847 | 0.892461 | ||

| under 50 | unvaccinated | 147564 | 0.621715 | |

| vaccinated | 89786 | 0.378285 |

This gives us a much different idea of how many of each age group received the vaccine. For example, if we only had data on those who died, the data would tell us that only 69% of those over 50 are vaccinated when the true number is 89%. This is why it important to understand relationships between variables in your data before conducting analyses or making claims about association.